- cross-posted to:

- aistuff@lemdro.id

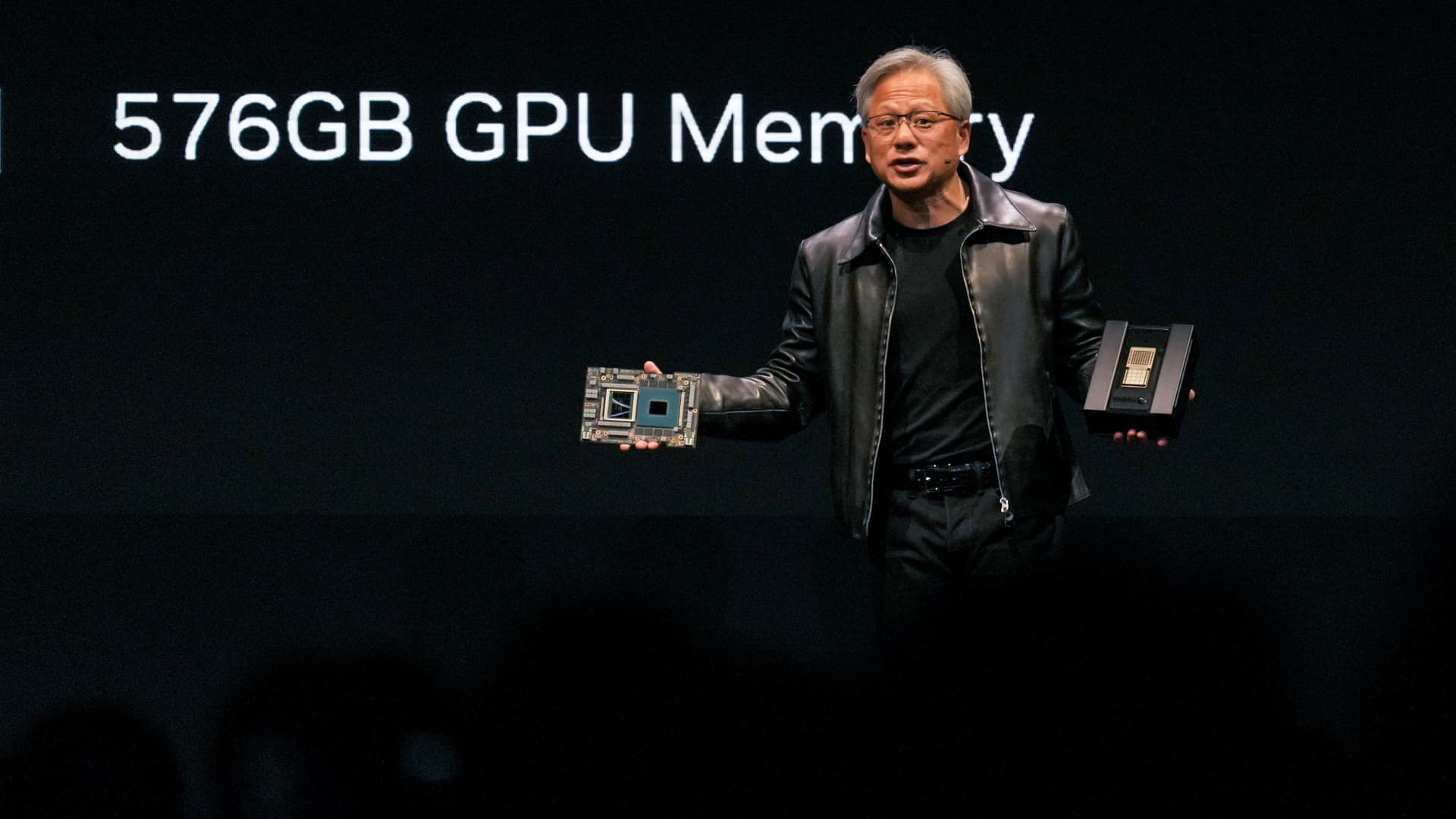

Nvidia reveals new A.I. chip, says costs of running LLMs will ‘drop significantly’::Currently, Nvidia dominates the market for AI chips, with over 80% market share, according to some estimates.

deleted by creator

Nvidia has always been a tech company that also happens to make consumer graphics cards.

deleted by creator

Yeah, I misspoke there, but for most of recent memory they’ve been doing big things besides consumer graphics cards. Nvidia launched its professional-oriented Quadro graphics line in 2000. They launched the CUDA architecture in 2006, which opened up the parallel processing capabilities of GPUs for use in science and research. They entered the data center and cloud computing market in the early 2010s, and in 2015 they launched the DRIVE product line.

deleted by creator

Until the GeForce 3/Radeon 8500, GPUs weren’t programmable, and then you’re looking at the GeForce 8 in 2007 when CUDA came into it. They’ve been building up to this for a long time, including data centre cards. This isn’t a recent thing; they’re trying to get the most reward out of what they’ve been investing in while the wave lasts.

As much as I’d love to upgrade my PC, gamers definitely don’t own this type of technology. It’s like people hoping for VR gaming to take off: even if gaming was where a lot of the technologies it relies upon ‘grew up’, there was never any obligation for VR gaming to become a thing. Companies definitely don’t care where their money comes from.

They definitely do, and I think NVIDIA doesn’t want their money to come from customers who DIY build PC towers.

I don’t think NVIDIA minds the money they get from the DIY builders market, but they get a lot more money from OEMs. They shouldn’t neglect the DIY market, though. If the enthusiasts stop recommending their GPUs, then the big OEMs will eventually drop them too.

AI is just in a gold rush right now. Companies are throwing around piles of money to develop it.

Intel are the real dark horse to watch regarding OEMs. If they can get a good CPU+GPU or APU offering bundled up, then I can see that being a threat. They’re already kicking AMD’s ass in the CPU department (in terms of sales) for OEM machines because of the good relationships they have with the big builders.

That said, Intel also has designs on GPUs for servers. It also relies on Arc not shitting the bed, but you can say that about any company. For the foreseeable future I don’t think they need to be high-end, just competent and with a pricing wedge between them and Nvidia/AMD.

And before that it was a crypto mining company, with a sideline in gaming graphics hardware.

deleted by creator

It’s always been that way, whether it’s been AI or something else. Nothing wrong with that.

It’s not all bad. Unlike crypto mining, the vector transformations done for ML are relatively similar to those done in graphics processing, so any innovations on the ML front will probably yield improvements in graphics.
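To illustrate the overlap, here is a rough sketch in plain Python (no GPU involved; the `matvec` helper and the weight values are made up for the example). The same matrix-vector multiply rotates a point, as a graphics pipeline would, and computes a tiny dense layer, as an ML model would:

```python
import math

def matvec(matrix, vector):
    """Multiply a matrix (given as a list of rows) by a vector."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]

# Graphics-style use: rotate the 2D point (1, 0) by 90 degrees counter-clockwise.
theta = math.pi / 2
rotation = [
    [math.cos(theta), -math.sin(theta)],
    [math.sin(theta),  math.cos(theta)],
]
rotated_point = matvec(rotation, [1.0, 0.0])  # approximately (0, 1)

# ML-style use: one dense layer with hypothetical weights, followed by ReLU.
weights = [
    [0.5, -1.0],
    [2.0,  0.25],
]
layer_output = [max(0.0, x) for x in matvec(weights, [1.0, 2.0])]
```

Both workloads boil down to lots of independent multiply-adds, which is the kind of work GPUs parallelize well.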

A company focusing on its profits and not my 4K DLSS Witcher 3?!?!