Don’t be a fan of one or the other, just get what’s more appropriate at the time of buying.

Ugh. Can I just say how much I fucking HATE how every single fucking product on the market today is a cheap, broken, barely functional piece of shit.

I swear to God the number of times I have to FIX something BRAND NEW that I JUST PAID FOR is absolutely ridiculous.

I knew I should’ve been an engineer, how easy must it be to sit around and make shit that doesn’t work?

Fucking despicable. Do better or die, manufacturers.

Most of the time, the product itself comes out of engineering just fine and then it gets torn up and/or ruined by the business side of the company. That said, sometimes people do make mistakes - in my mind, it’s more about how they’re handled by the company (oftentimes poorly). One of the products my team worked on a few years ago was one that required us to spin up our own ASIC. We spun one up (in the neighborhood of $20-30 million USD), and a few months later, found a critical flaw in it. So we spun up a second ASIC, again spending $20-30M, and when we were about to release the product, we discovered a bad flaw in the new ASIC. The products worked for the most part, but of course not always, as the bug would sometimes get hit. My company did the right thing and never released the product, though.

It’s almost never the engineers’ fault. That whole NASA spacecraft that exploded was due to bureaucracy and pushing the mission forwards.

Planned Obsolescence is a real problem. But Intel in this situation is just straight up incompetence.

Capitalism: “Make as much as possible as fast as possible”

Capitalism: “Growth or die!”

Earth: I mean… If that’s how it’s gotta be, you little assholes🤷👋🔥

It’s kind of gallows hilarious that for all the world’s religions worshipping ridiculous campfire ghost stories, we have a creator, we have a remarkable macro-organism mother consisting of millions of species, her story of hosting life going back 3.8 billion years, most living in homeostasis with their ecosystem.

But our actual creator, Earth, not some fucking ridiculous work of lazy fiction, we literally choose to treat like our property to loot, rape, and pillage thoughtlessly, and we continue to act as a cancer upon her, eyes wide open. We as a species are so fucking weird, and not the good kind.

AMD fans be like:

you know, im kinda a pc fan myself…

This keeps getting slightly misrepresented.

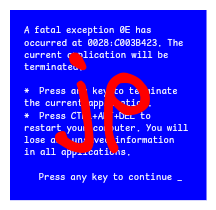

There is no fix for CPUs that are already damaged.

There is a fix now to prevent it from happening to a good CPU.

But isn’t the fix basically underclocking those CPUs?

Meaning the “solution” (not even out yet) is crippling those units before the flaw cripples them?

They said the cause was a bug in the microcode making the CPU request unsafe voltages:

“Our analysis of returned processors confirms that the elevated operating voltage is stemming from a microcode algorithm resulting in incorrect voltage requests to the processor.”

If the buggy behaviour of the voltage contributed to higher boosts, then the fix will cost some performance. But if the clocks were steered separately from the voltage, and the boost clock is still achieved without the overly high voltage, then it might be performance neutral.

I think we will know for sure soon, multiple reviewers announced they were planning to test the impact.

Thanks for the clarification

That was the first “Intel Baseline Profile” they rolled out to mobo manufacturers earlier in the year. They’ve rolled out a new fix now.

Not out yet. But you can manually set your clocks and disable boost.

Not out yet.

Actually the 0x129 microcode was released yesterday. Now it depends on which motherboard you have and how quickly the manufacturer releases a BIOS that packages it. According to AnandTech, ASUS and MSI had already released theirs before Intel made the announcement. I see some for Gigabyte and ASRock too.

So, not out yet. At least not fully.

If you prefer being right, rather than just accepting the extra information, then sure let’s go with that.

im a fan of no corporation especially not fucking amd, but they have been so much better than intel recently that im struggling to understand why anyone still buys intel

Most of the shopping I’ve been helping people with lately has been for laptops. And while there are slightly more AMD options than before, laptops are still dominated by Intel for the most part. Especially if you’re trying to help someone pick something while on a tighter budget.

Of all the CPU and GPU manufacturers out there, AMD is the most consistently pro-consumer with the least corporate fuckery, so I take mighty exception at your ‘especially not fucking amd’ comment.

Researchers discover potentially catastrophic exploit present in AMD chips for decades

They’re both very flawed

Despite being potentially catastrophic, this issue is unlikely to impact regular people.

Doesn’t seem very similar to me.

Sounds like some precious and sweet intelboi feels bad holding the bag…

Ryzen gang

My 7800x3d is incredible, I won’t be going back to Intel any time soon.

Me who bought AMD cpu and gpu last year for my new rig cause fuck the massive mark up for marginal improvement on last gen stats.

tldr: Flaw can give a hacker access to your computer only if they have already bypassed most of the computer’s security.

This means continue not going to sketchy sites.

Continue not downloading that obviously malicious attachment.

Continue not being a dumbass.

Proceed as normal.

Because if a hacker got that deep your system is already fucked.

For CPUs nothing beats AMD

Honestly even with GPUs now too. I was forced to team green for a few years because they were so far behind. Now though, unless you absolutely need a 4090 for some reason, you can get basically the same performance from AMD for 70% of the cost.

I disagree. Processing power may be similar, but Nvidia still outperforms with raytracing, and more importantly DLSS.

What’s the point of having the same processing power when Nvidia still gets more than double the FPS in any game that supports DLSS?

FSR exists, and FSR 3 actually looks very good when compared with DLSS. These arguments about raytracing and DLSS are getting weaker and weaker.

There are still strong arguments for Nvidia GPUs in the prosumer market due to the usage of their CUDA cores with some software suites, but for gaming, Nvidia is just overcharging because they still hold the mindshare.

I had the 3090 and then the 6900xtx. The differences were minimal, if even noticeable. AMD’s ray tracing is about a generation behind Nvidia’s, but they’re catching up.

As the other commenter said too, FSR is the same as DLSS. For me, I actually got a better frame rate with FSR playing Cyberpunk and Satisfactory than I did with DLSS!

Are you just posting this under every comment? This isn’t even a fraction as bad as the Intel CPU issue. Something tells me you have Intel hardware…

Glad my first self-built PC is full AMD (built about a year ago).

Screw Intel and Nvidia

7700X is what it was built with

Thank God I haven’t bought one with this. I was about to buy one.

bro think he amd gpu

You’re an AMD fan? Will you admit AMD is having just about the same exact problem?

Researchers discover potentially catastrophic exploit present in AMD chips for decades

Just goes to show fanboying for a company is bad for the industry and for yourself.

You should work for userbenchmark, if you’re not already. You got what it takes, kid

I’m not up to speed on the discovery you linked. It appears to be a vulnerability that can’t be exploited remotely? If so, how is this the same as Intel chips causing widespread system instability?

This isn’t the first time such a vulnerability has been found. Have you forgotten Spectre/Meltdown? Though this is arguably not nearly as impactful as those because it requires physical access to the machine.

Your fervour in trying to paint this as an equivalent problem to Intel’s 13th and 14th gen defects, and the implication that everyone else is being a fanboy, is just telling on yourself mate. Normal people don’t go to bat like that for massive corpos, only Kool-Aid drinkers.

Buddy, Intel ain’t a woman and even if they were they’d never fellate you.