I had a post of mine flagged for multiple days on there because it had an illustration of a woman in a full-length wool coat that completely covered her and was not in any way sexual. Shit is so stupid.

One of the biggest problems with the internet today is that bad actors know how to manipulate or dodge content moderation to avoid punitive consequences. The big social platforms are moderated by the most naive people in the world. It's either that or willful negligence. Has to be. There's just no way these tech bros who spent their lives deep in internet culture are so clueless about how to moderate content.

> bad actors know how to manipulate or dodge the content moderation to avoid punitive consequences.

People have been doing that since the dawn of the internet. People on my old forum in the 90s tried to circumvent profanity filters on phpBB.

Even now you can get round Lemmy.World filters against “fag-got” by adding a hyphen in it.

Nothing new under the sun.

How do we know they didn’t type something more explicit to get the result and just change what’s in the search bar? Has anyone verified this?

I actually don't know. I'm not sure it is possible (I've never used Instagram; the search might be auto-submitting for all I know), but intentionally flagging yourself as a potential child abuser, for clout, is a bit extreme…

Ignorance is bliss

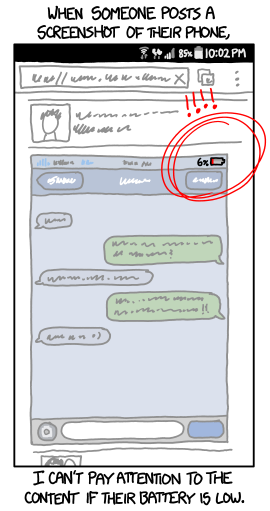

16% is pretty good. The ones at three to one percent are the weirdos.

My skin crawls if it goes below 30%.

You ever seen a phone at 0%?


I really hate it when my phone switches into battery saver at 15%, and I try to avoid it, so in my mind 16% is like 1%.

Based on that one Senate hearing, it looks like big companies like Facebook, Discord and Twitter are aiming for the maximum percent of false positives and false negatives when it comes to CSAM.

The only thing I know about that screenshot is that it used to say "show results anyway", which is probably worse in most cases.

With any luck this will destroy them and funnel disgruntled users our way, where the servers are too numerous to ever fully take down and many aren't even US-based anyways.

Unfortunately, I don't think so. Most of the politicians were virtue signaling, asking questions that were impossible to answer and demanding timetables that they weren't going to get anyway. One woman actually had some half-decent data prepared, but I don't think anybody else was really taking it seriously.

Now if there was some legislation passed, specifically stuff that wasn’t KOSA, that would be something else. KOSA seems prepped to simply destroy free speech on the internet, and it would mostly harm smaller social media networks that don’t have lawyers and around-the-clock moderators to police every single comment and post.

I’m fine with abducting children for a Super-Soldier program. But I draw the line at having photos of them on Instagram. Honestly, a deserved warning. Be better 👏

This should be obvious. Photos of classified military assets shouldn’t be posted online.